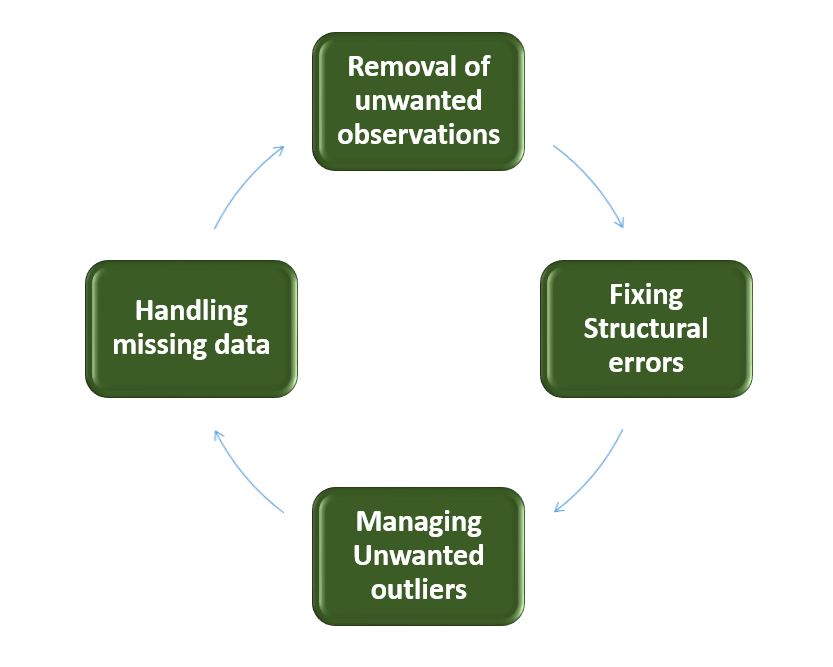

Data cleaning techniques involve removing inaccuracies, correcting errors, and filling in missing data. These steps ensure data quality and reliability.

Data cleaning is a crucial step in data analysis and management. It helps improve data accuracy and consistency, making it reliable for decision-making. Common techniques include removing duplicates, handling missing values, and correcting inconsistencies. Data cleaning also involves standardizing data formats and validating data against predefined rules.

Effective data cleaning can significantly enhance the quality of insights derived from data. Businesses rely on clean data to make informed decisions, optimize processes, and drive growth. Therefore, investing time and resources in data cleaning is essential for any organization aiming for data-driven success.

Credit: www.capellasolutions.com

Introduction To Data Cleaning

Data cleaning is a crucial step in data analysis. It ensures your data is accurate and usable. Clean data leads to better insights and decisions.

Importance Of Clean Data

Clean data means accurate and consistent information. It is essential for reliable analysis. Without clean data, results can be misleading.

Clean data helps in making better decisions. It reduces errors and increases efficiency. It also saves time and resources.

Common Data Issues

Dirty data can have many problems. Here are some common issues:

- Missing values: Data entries are incomplete.

- Duplicates: Same data appears multiple times.

- Inconsistent formats: Data is not in a uniform style.

- Outliers: Unusual data points that deviate from the norm.

- Typos and errors: Mistakes in data entry.

Handling these issues is vital. It ensures the integrity of your data.

| Data Issue | Description |

|---|---|

| Missing Values | Incomplete data entries. |

| Duplicates | Repeated data entries. |

| Inconsistent Formats | Data in different styles. |

| Outliers | Data points that are far from others. |

| Typos and Errors | Incorrect data due to entry mistakes. |

Data Profiling

Data profiling is a crucial step in data cleaning. It involves examining data sources to understand their structure, content, and interrelationships. This process helps identify potential issues that could affect data quality.

Understanding Data Quality

Understanding data quality is essential for effective data profiling. Data quality refers to the condition of data based on factors like accuracy, completeness, consistency, reliability, and relevance. High-quality data ensures better decision-making and operational efficiency.

- Accuracy: Ensures data is correct and free from errors.

- Completeness: Ensures all required data is present.

- Consistency: Ensures data is uniform across different sources.

- Reliability: Ensures data is dependable over time.

- Relevance: Ensures data is applicable to the context.

Identifying Data Anomalies

Identifying data anomalies is a key aspect of data profiling. Anomalies are irregularities or deviations in data that can affect its quality. Detecting and correcting these anomalies is vital for maintaining data integrity.

| Anomaly Type | Description | Example |

|---|---|---|

| Missing Data | Data that is absent where it should be present. | Missing values in a dataset. |

| Duplicate Data | Repeated entries in a dataset. | Duplicate customer records. |

| Inconsistent Data | Data that does not match across sources. | Different formats for dates. |

| Outliers | Data points that differ significantly from others. | Extremely high or low values. |

Detecting these anomalies involves using various techniques. These include statistical analysis, data visualization, and automated tools. Correcting these anomalies ensures data is clean and reliable.

Handling Missing Data

Dealing with missing data is crucial in data cleaning. Missing data can lead to inaccurate analysis. Various techniques can help manage missing data effectively.

Imputation Methods

Imputation involves filling in missing values with substitute values. It ensures the dataset remains complete. There are several common imputation methods:

- Mean Imputation: Replace missing values with the mean of the column.

- Median Imputation: Use the median value of the column for missing values.

- Mode Imputation: Fill in missing values with the mode (most frequent value).

- K-Nearest Neighbors (KNN): Use the values from the nearest neighbors to impute missing data.

For example, consider the following table of data:

| Age | Salary |

|---|---|

| 25 | 50000 |

| 30 | 60000 |

| 28 | ? |

| 35 | 80000 |

If the salary for age 28 is missing, we can use the mean salary (63333) to fill it in.

Dropping Missing Values

Another approach is dropping missing values. This method removes rows or columns with missing data. It is simple but can lead to data loss. Use this method when the missing values are few.

Consider the same table:

| Age | Salary |

|---|---|

| 25 | 50000 |

| 30 | 60000 |

| 28 | ? |

| 35 | 80000 |

We can drop the row with the missing salary value:

| Age | Salary |

|---|---|

| 25 | 50000 |

| 30 | 60000 |

| 35 | 80000 |

This results in a smaller but complete dataset.

Removing Duplicates

Removing duplicates is a crucial step in data cleaning. Duplicate records can cause inaccurate analysis, wasted resources, and misleading insights. Effective techniques for identifying and removing duplicates ensure data integrity and reliability.

Identifying Duplicate Records

Identifying duplicate records is the first step. You can use various methods to spot duplicates:

- Exact Match: Check if all fields match exactly.

- Partial Match: Use specific fields to find potential duplicates.

- Fuzzy Matching: Identify records with similar but not identical data.

Here is a simple example in Python:

import pandas as pd

data = {'ID': [1, 2, 3, 2, 4],

'Name': ['Alice', 'Bob', 'Charlie', 'Bob', 'Eve']}

df = pd.DataFrame(data)

duplicates = df[df.duplicated()]

print(duplicates)

Strategies For Removal

After identifying duplicates, use different strategies to remove them. Some common strategies are:

- Remove All Duplicates: Delete all duplicate records.

- Keep First Record: Retain the first occurrence and remove others.

- Keep Last Record: Retain the last occurrence and remove others.

Here is a code snippet to remove duplicates in Python:

# Keep the first occurrence

df_unique = df.drop_duplicates(keep='first')

# Keep the last occurrence

df_unique = df.drop_duplicates(keep='last')

# Remove all duplicates

df_unique = df.drop_duplicates(keep=False)

By following these techniques, you can ensure your data is clean and reliable.

Data Standardization

Data Standardization is a critical step in data cleaning. It ensures that data follows a consistent format. This process makes data easier to analyze. Without standardization, data can be messy and hard to work with.

Consistent Formats

Consistent formats mean data follows the same pattern. For example, dates should follow a single format. Use either “MM/DD/YYYY” or “YYYY-MM-DD” throughout your dataset.

Phone numbers should also be standardized. Choose a format like “(123) 456-7890” or “123-456-7890”. Ensure all phone numbers in your data follow this format.

Addresses need standardization too. Use abbreviations like “St.” for “Street” and “Ave.” for “Avenue”. This ensures consistency and makes searching easier.

Normalization Techniques

Normalization involves organizing data to reduce redundancy. This process helps in cleaning and maintaining data.

Here are some common normalization techniques:

- Remove Duplicates: Ensure each record is unique.

- Split Data: Divide complex data into simpler parts.

- Use Codes: Replace long entries with short codes. For example, replace “New York” with “NY”.

Using these techniques ensures your data is clean and ready for analysis.

Dealing With Outliers

Dealing with outliers is crucial in data cleaning. Outliers can skew results. They affect the quality of analysis. Handling them properly ensures accurate data insights.

Detecting Outliers

Detecting outliers is the first step. Various methods can help. Common techniques include:

- Z-score: Measures how far a value is from the mean.

- IQR (Interquartile Range): Detects outliers based on quartiles.

- Box plots: Visual representation of data spread.

Using these methods, you can spot unusual data points. Below is a table showing common thresholds:

| Method | Threshold |

|---|---|

| Z-score | > 3 or < -3 |

| IQR | 1.5 IQR |

| Box plot | Outliers beyond whiskers |

Outlier Treatment

After detecting outliers, treating them is next. Common treatments include:

- Removal: Simply remove the outlier data points.

- Transformation: Apply transformations to reduce impact.

- Imputation: Replace outliers with a central value.

Choose the treatment based on data context. Be mindful of data integrity. Ensuring accurate data is crucial for analysis.

Outlier treatment helps in maintaining data quality. It improves the reliability of results.

Data Validation

Data validation is crucial in data cleaning. It ensures the accuracy and quality of data. By applying validation techniques, you can identify and correct errors. This step is essential for reliable data analysis. Let’s explore some key aspects of data validation.

Validation Rules

Validation rules are predefined criteria. They help in checking data accuracy. Here are some common types of validation rules:

- Range Check: Ensures data falls within a specific range.

- Format Check: Verifies data follows a specified format.

- Consistency Check: Ensures data is logically consistent.

- Presence Check: Confirms that no essential data is missing.

These rules help maintain data integrity. They ensure that data meets set standards.

Automated Tools

Automated tools simplify data validation. They save time and reduce errors. Some popular data validation tools include:

| Tool | Features |

|---|---|

| OpenRefine | Clean and transform data efficiently. |

| Trifacta Wrangler | Intuitive interface for data cleaning. |

| DataCleaner | Data profiling and validation capabilities. |

These tools automate data validation. They make data cleaning more manageable.

Combining validation rules and automated tools ensures high-quality data. This leads to more accurate analysis and better decision-making.

Credit: www.ccslearningacademy.com

Best Practices

Data cleaning is essential for any data-driven project. Ensuring clean data leads to more accurate insights and decisions. Here, we discuss the best practices to maintain clean data. These practices help in achieving reliable and efficient data handling.

Regular Audits

Regular audits help in maintaining data quality. Conduct audits periodically to spot and correct errors. This ensures that data remains accurate over time.

- Schedule audits on a monthly or quarterly basis.

- Use automated tools to streamline the audit process.

- Document all findings and corrections.

Regular audits also help in identifying patterns of common errors. By analyzing these patterns, preventive measures can be established.

Documentation

Proper documentation is crucial for effective data cleaning. It serves as a reference for future data management activities.

- Create a data dictionary. This helps in understanding the data attributes.

- Document all cleaning procedures. This ensures consistency.

- Keep records of all data sources. This aids in traceability.

| Documentation Element | Purpose |

|---|---|

| Data Dictionary | Defines data attributes |

| Cleaning Procedures | Ensures consistent practices |

| Data Sources | Aids in data traceability |

Good documentation practices ensure that everyone understands the data cleaning processes. This fosters better collaboration among team members.

Credit: www.geeksforgeeks.org

Frequently Asked Questions

What Is Data Cleaning?

Data cleaning is the process of detecting and correcting errors in a dataset. It involves removing duplicates, correcting errors, and filling in missing values. Data cleaning ensures the data is accurate, consistent, and usable for analysis.

Why Is Data Cleaning Important?

Data cleaning is crucial for accurate data analysis. It removes errors and inconsistencies, ensuring reliable results. Clean data improves decision-making and enhances the quality of insights derived from the data.

How To Handle Missing Data?

Handling missing data involves several techniques. You can delete rows with missing values, replace them with mean or median values, or use algorithms to predict and fill them in. The method chosen depends on the dataset and analysis goals.

What Tools Are Used For Data Cleaning?

Several tools are available for data cleaning. Popular ones include Python libraries like Pandas, R programming language, and software like Excel and OpenRefine. These tools offer functionalities to automate and simplify the data cleaning process.

Conclusion

Mastering data cleaning techniques is essential for accurate analysis and decision-making. By implementing effective methods, you ensure data reliability. Clean data enhances insights, reduces errors, and boosts overall efficiency. Embrace these techniques to maintain high-quality datasets, leading to better outcomes in your projects.

Prioritize data cleaning for a successful data-driven future.